main page

Explode! for gamers

rules

Download

Credits

version infos

Explode! for developers

AI development

depth-first search

breadth-first search

rule of thumb

Android development

earning money

Explode! for gamers

rules

Download

Credits

version infos

Explode! for developers

AI development

depth-first search

breadth-first search

rule of thumb

Android development

earning money

My second trial (after depth-first search) was a breadth-first search.

In general the breadth-first search comes along with some advantages over depth-first search. Advantage number one is that you can set a time limit and let the algorithm check every possible move as good or as bad as every other possible move. Advantage number two is that the algorithm can absorb some disatvantages of the depth-first search algorithm.

The biggest disatvantage of the bredth-first search is that you have to save or cache many different game states at once. This leads to a massive memory use. Good to know we are able to decrease the needed amount of memory and the number of calculations needed for movement analyzation.

If we implement the breadth-first search algorithm without any optimization, from these 16 states we get another 16 * 4 = 64 following states and then 64 * 4 = 256 states and so on.

Of course we won't stop at 4 following states at a time, because sooner or later each player will get new possible moves by expanding on the board - until one player gets up to 24 possible moves.

Fortunately since half move number 3, most of the possible states aren't unique. Assuming that red player upgrades field number 1, then blue player increases field 25 and red player finally increases field number 2. Then we have a special state "A". If red player first increased field 2, then blue player increased field 25 and finally red player increased field 1, then we would get the same state "A".

If the algorithm uses lists called "possible states after X+1 half moves", and if it caches every unique reached half move, we can decrease the number of "different" states we really have to check and evaluate.

I tested the algorithm using such an optimization and realized that the app saves an amazing amount of calculation time and memory. Finally we get further long range synergy effects after X+2, X+3 or more half moves.

Worse: Although the P920 offers 64 MB memory for a single application, I got OutOfMemory exceptions soon.

Of course we can further decrease needed memory per object which caches a game state. But we have to consider many phones don't even offer half of that amount of memory. And a notable amount of that memory is reserved for ingame graphics.

At last I decided to develop a weighting algorithm, that doesn't need to calculate move after move, but to recognize tactical situations and rate them in a more human manner. You can read about it in the next chapter.

In general the breadth-first search comes along with some advantages over depth-first search. Advantage number one is that you can set a time limit and let the algorithm check every possible move as good or as bad as every other possible move. Advantage number two is that the algorithm can absorb some disatvantages of the depth-first search algorithm.

The biggest disatvantage of the bredth-first search is that you have to save or cache many different game states at once. This leads to a massive memory use. Good to know we are able to decrease the needed amount of memory and the number of calculations needed for movement analyzation.

An optimization

The game always starts with the same initial situation: both players own four fields. This implies that player 1 can choose one of four different possible moves in her turn (which lead to four different subsequent situations.) From now on the player 2 can choose from one of four possibles moves but based on each of the four possible initial situations. After 2 half moves already 16 different game states exist.If we implement the breadth-first search algorithm without any optimization, from these 16 states we get another 16 * 4 = 64 following states and then 64 * 4 = 256 states and so on.

Of course we won't stop at 4 following states at a time, because sooner or later each player will get new possible moves by expanding on the board - until one player gets up to 24 possible moves.

Fortunately since half move number 3, most of the possible states aren't unique. Assuming that red player upgrades field number 1, then blue player increases field 25 and red player finally increases field number 2. Then we have a special state "A". If red player first increased field 2, then blue player increased field 25 and finally red player increased field 1, then we would get the same state "A".

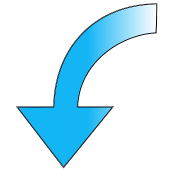

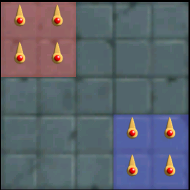

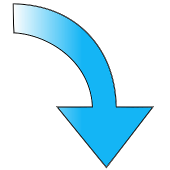

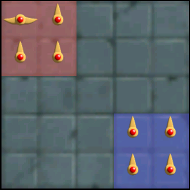

An example: different moves can lead to the same following state.

|  common initial situation |  |

situation 1 after a move |  alternative situation 2 after another move | |

situation after the opponent's move |  situation after the opponent's move | |

|  common situation |  |

If the algorithm uses lists called "possible states after X+1 half moves", and if it caches every unique reached half move, we can decrease the number of "different" states we really have to check and evaluate.

I tested the algorithm using such an optimization and realized that the app saves an amazing amount of calculation time and memory. Finally we get further long range synergy effects after X+2, X+3 or more half moves.

out of memory...

When I tested this algorithm with a reasonable depth of half moves (10 or higher), I realized that the needed amount of memory increased rapidly. The time needed by the garbage collector nullified the reduction of needed calculations soon.Worse: Although the P920 offers 64 MB memory for a single application, I got OutOfMemory exceptions soon.

Of course we can further decrease needed memory per object which caches a game state. But we have to consider many phones don't even offer half of that amount of memory. And a notable amount of that memory is reserved for ingame graphics.

At last I decided to develop a weighting algorithm, that doesn't need to calculate move after move, but to recognize tactical situations and rate them in a more human manner. You can read about it in the next chapter.